The transition from traditional data security approaches to modern data centric and identity first approaches is finally gaining momentum, as organizations are forced to throw out costly and resource intensive ways of doing things in the current economic environment.

The most archaic data security approach being targeted is at its foundation – data classification. Historically organizations may have tasked an army of people to gather information on the data inventory of the organization. I myself remember the excitement I had when I created a workflow in Archer to automate the process of gathering this information. A central place to find all this valuable information!!!! But like you’ve undoubtedly experienced, these manual efforts often result in failure because of the reliance on users with insufficient training or knowledge of the organization, systems, security requirements or data within the systems.

Even for organizations that focus more on scanning and classifying all the data within data stores, they struggle to do this classification in a usable time frame, with the resource intensive approaches in use – often taking several months to complete. To further complicate matters, the consumption based model implicit in adoption of the cloud has added a price tag to each operation performed on the data.

Excess time and cost spent for yet more false positives

Traditionally, data classification has involved scanning all data within a data store and then applying security labels to the data store. These security labels are then supposed to direct further data retention, data access and data protection policies that can help reduce the risk related to data exfiltration.

However, this scan-everything-classify-once methodology has proven to be both unnecessarily time consuming and expensive – particularly in the cloud where read or write on the data is charged per operation. This broad-brush approach necessitates sifting through substantial data volumes, regardless of whether users will ever access those data points or whether it is statistically likely that sensitive data will be found. All at a cost.

When you consider that an enduring challenge in automated data classification is the occurrence of false positives (data is mistakenly flagged as sensitive or classified), the indiscriminate scrutiny of entire data repositories also further magnifies the number of false positives. The absence of contextual understanding and statistical relevance amplifies the risk of erroneously identifying data stores as sensitive, based on a false positive. Consequently, valuable and expensive security resources are often diverted to protect data that might not necessitate heightened security or immediate action.

Without identity context, remediation falls short

Regardless of the existence of false positives, organizations are challenged with understanding what the best course of action is once data within a data store has been classified, unless they have additional context on who has access to the data, and how it is being used.

A few simple thought experiments to demonstrate this.

What is the best way to reduce your data risk when:

| Scenario 1: You identify sensitive data in a data store | Scenario 2: You identify sensitive data in a data store | Scenario 3: You identify sensitive data in a data store |

The only option in each of those scenarios is to do more assessment. Contrast that with some additional context about the permissions and operations.

| Scenario 1: You identify sensitive data in a data store. All the data within the data store has not been used in over 18 months. | Scenario 2: You identify sensitive data in a data store. The only users with access to the sensitive data are admin users and a backup service account. Only the backup service account has made any operations involving the sensitive data. | Scenario 3: You identify sensitive data in a data store. An IAM group provides everyone in the organization with access to the sensitive data, but only a few users have actually used the data in the last 12 months. |

As you can clearly see from the scenario, a clear course of action is almost immediately visible with additional context, whether it is archiving the data store and removing access, removing the data, including from the backup or creating a more appropriate group to manage access.

But instead, thousands of organizations are struggling to make any meaningful change to their data security after a heavy investment of time and money in completing classification of their data. All of them wondering what to do with this data inventory, how to keep it updated, and what the security value is?

The solution? A modern approach to data security that understands the challenges from data operations and considers not just the data sensitivity, but also the intersection with identity and its use within the organization.

Modern Data Security in Action with Symmetry DataGuard

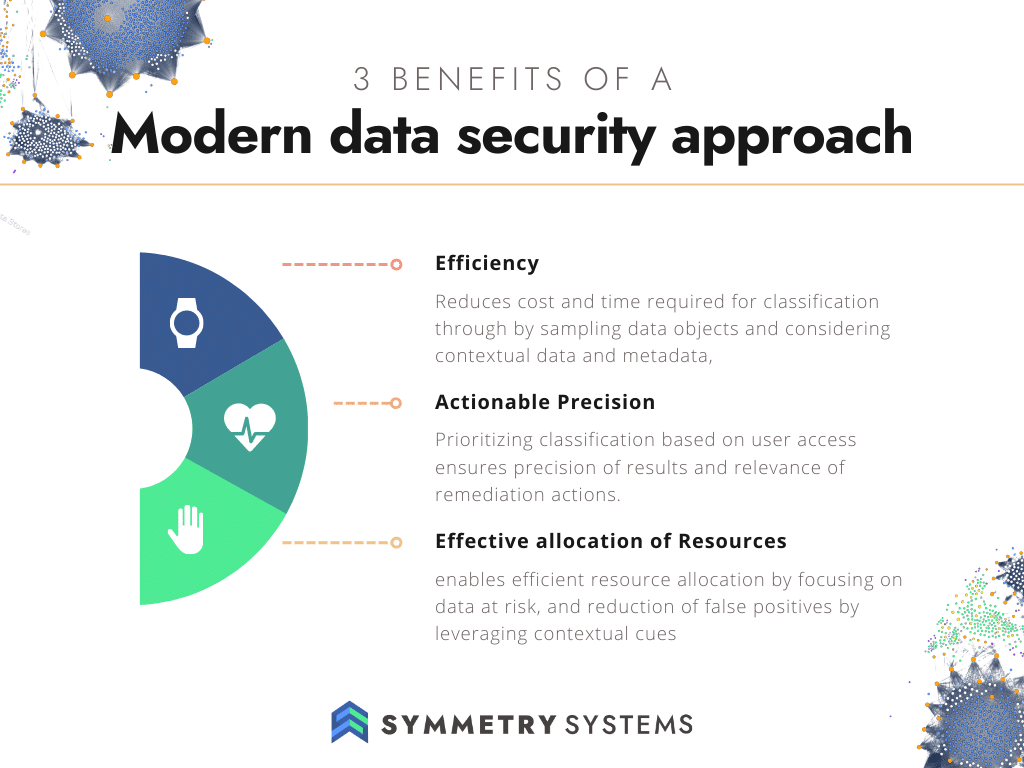

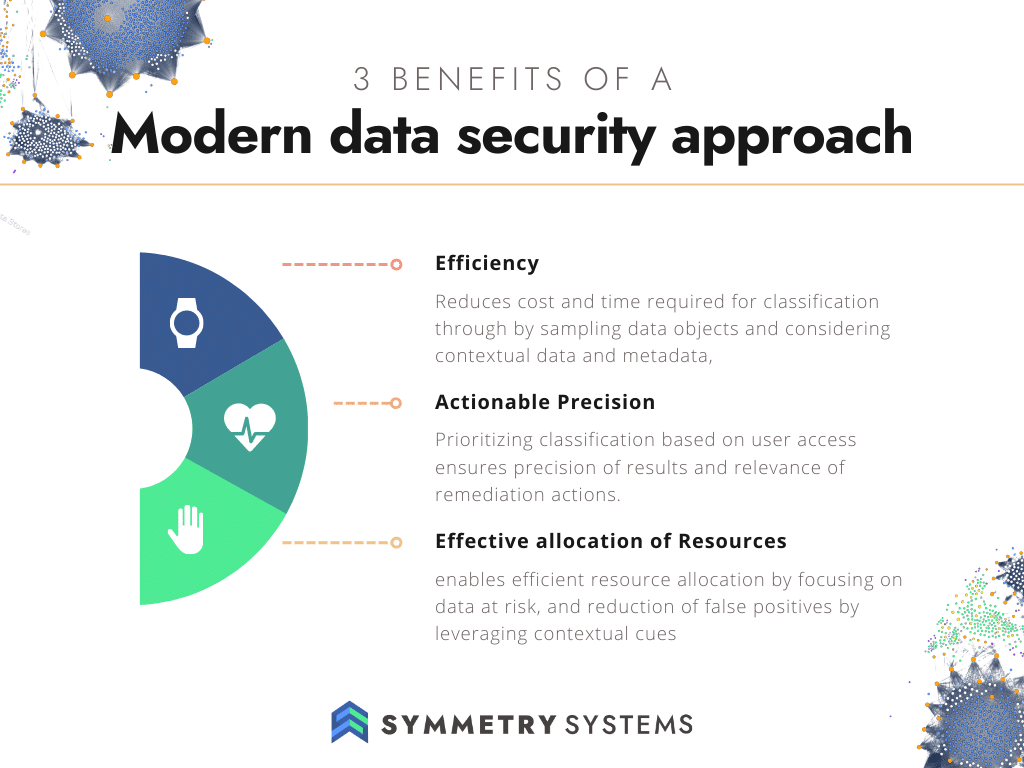

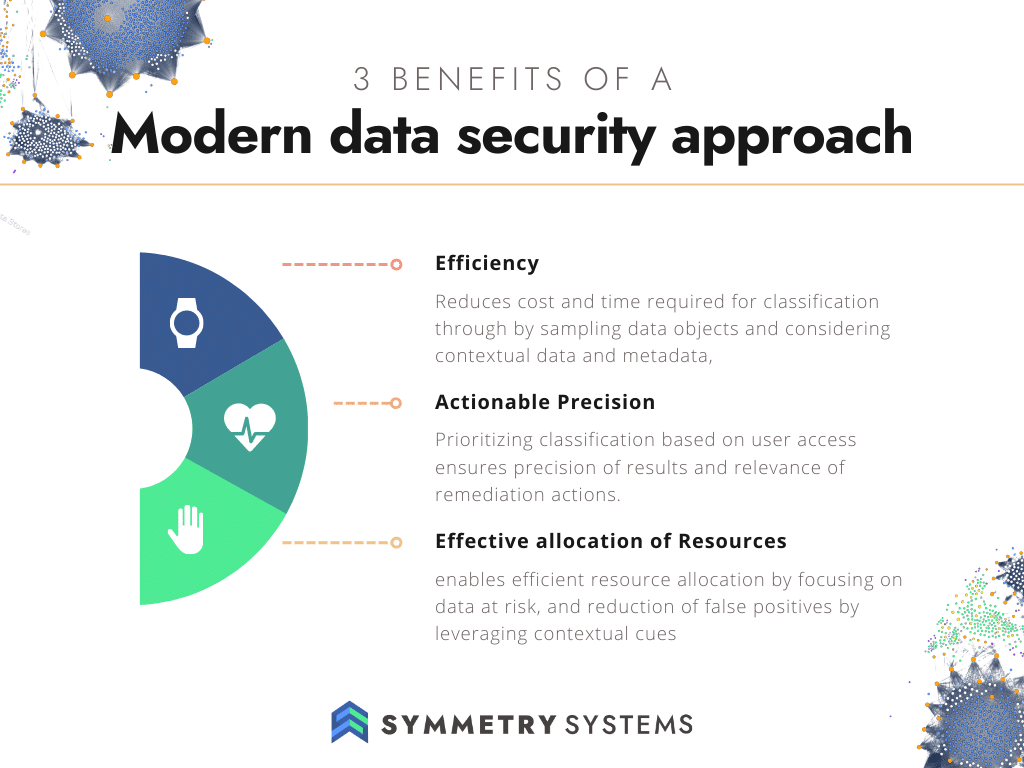

Our Symmetry DataGuard product embodies the modern data security approach, utilizing cutting-edge techniques to maximize security while minimizing costs. Through intelligent data object sampling, context aware classification and identity first prioritization, we not only streamline the classification process and reduce false positives, but enhance the remediation process – optimizing resource allocation and enhancing security efficacy.

Advantages Amplified: The Modern Approach

At Symmetry Systems, we apply the concept of data objects, the smallest unit of data that can be secured through identity controls. Data objects are the logical constructs of data that access can be restricted to, encompassing granular data portions like files, rows, fields, columns, records and views. Instead of scanning entire data stores, we focus on these data objects and take statistically relevant samples (“intelligent sampling”) of data within the data objects to form a unique view of the intersection between data and identities. We further address the false positive challenge head-on by integrating context awareness into the classification process. Contextual understanding involves considering the broader data environment, encompassing attributes such as row and column headings in relational databases, semantic context around the actual classification, metadata based on other classifications on the target data object, as well as the source and destination of the data. By factoring in these contextual cues, the modern approach reduces false positives and elevates the precision of classification.

This context-awareness not only sharpens the accuracy of data classification but also optimizes resource allocation. By focusing efforts on truly sensitive data, false positives are minimized, and resources are channeled more effectively, resulting in substantial cost savings. The sampled data is then further prioritized based on not only the identification of sensitive or classified data, but also its relevance to specific types of high risk users and the organization’s ability to control access.

Given the current economic circumstances, it is imperative that CISO’s use every opportunity to maximize security while minimizing costs. In my opinion, evolving to modern data classification approaches marks a significant leap forward in data security and is a must-have for any organization that has data that is worth protecting. Through data object sampling and prioritization based on user access, the modern approach exemplified by our DataGuard product demonstrates how strategic security enhancement can be achieved with remarkable efficiency. As data continues to play a pivotal role in our digital landscape, embracing precision, relevance, and protection in tandem remains essential for safeguarding data.

To learn more about our modern data security approach using DSPM or see a DSPM solution in action, please reach out. We’d love to show you how Symmetry DataGuard can help improve your data classification processes.